A recent study confirms that people cannot distinguish GPT-4 from a human in a Turing test during short conversations. Researchers evaluated three different AI systems: ELIZA, GPT-3.5, and GPT-4. They used a randomized, controlled, and preregistered experimental design. Human participants engaged in five-minute, text-based chats with either a human or an AI.

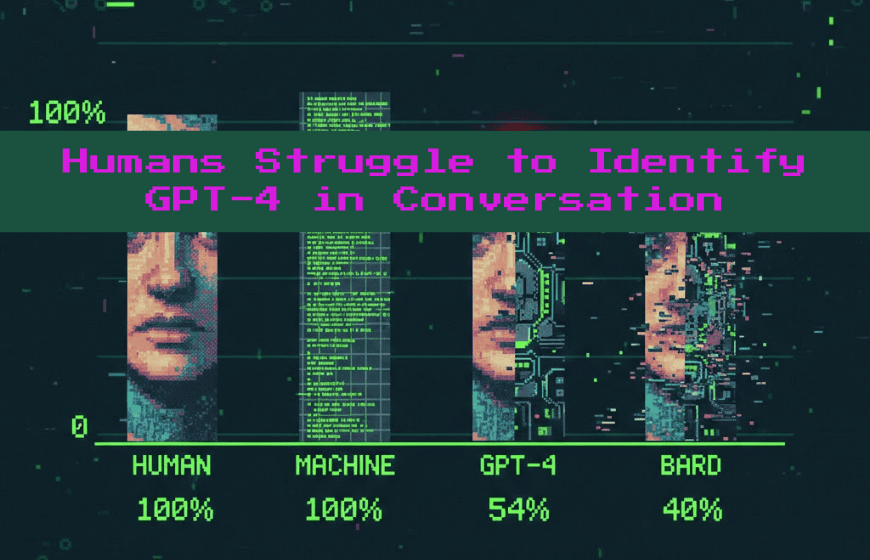

After the conversation, participants had to decide whether they had spoken to a person or a machine. GPT-4 was judged to be human 54% of the time. Random guessing would yield 50%, so participants were essentially at chance when trying to identify this model.
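To make "at chance" concrete, an exact two-sided binomial test asks how surprising a pass rate would be if interrogators were simply guessing (p = 0.5). The sketch below is only illustrative. The sample size of 100 games is a hypothetical assumption, since the article does not report how many games each witness type played, and the real significance depends on that number.

```python
from math import comb

def binomial_two_sided_p(successes: int, n: int, p: float = 0.5) -> float:
    """Exact two-sided binomial test: sum the probabilities of every outcome
    that is no more likely than the observed count under the null p."""
    pmf = [comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)]
    observed = pmf[successes]
    # A small relative tolerance guards against floating-point ties.
    return min(1.0, sum(q for q in pmf if q <= observed * (1 + 1e-7)))

# Hypothetical sample size: the article does not give the real n.
n = 100
for label, rate in [("GPT-4", 0.54), ("ELIZA", 0.22)]:
    k = round(rate * n)
    print(f"{label}: {k}/{n} judged human, p = {binomial_two_sided_p(k, n):.3g}")
```

Under these assumed counts, 54 out of 100 is well within what pure guessing would produce, while 22 out of 100 falls far below it. The p-values would change with the study's actual sample sizes.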

According to the research, the imitation game has reached a new milestone. GPT-4's 54% pass rate is lower than the 67% achieved by human witnesses, but it is still notable: it far outperformed the older ELIZA system, which fooled people only 22% of the time.

This suggests that Large Language Models (LLMs) are becoming highly effective at mimicking human behavior. The study found that people struggled even under "optimal" conditions for detection. Participants knew they might be speaking to an AI and were actively trying to spot it.

If detection is hard in a controlled test, it is likely harder in daily life. Most users in everyday online interactions are not primed to look for AI. This raises the risk that deception goes undetected in social and economic contexts.

The researchers used a hand-crafted prompt called "tiktok" for the GPT-4 model. This prompt instructed the AI to act like a young, slightly sassy person. It was told to use slang, make occasional spelling errors, and be concise.

The model was also told not to take the test too seriously. It used lowercase letters and avoided perfect punctuation to appear more natural. This strategy helped the model avoid typical AI cues like being overly helpful or verbose.
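The exact prompt text is not given in this article. As a rough illustration of how such a persona can be supplied to a chat model, the sketch below assembles a system message from the traits listed above. The wording is a hypothetical paraphrase, not the researchers' actual "tiktok" prompt, and the role/content message layout is an assumption borrowed from common chat-completion APIs; no model is called.

```python
# Hypothetical paraphrase of the persona traits described in the article.
# This is NOT the researchers' actual "tiktok" prompt text.
PERSONA_TRAITS = [
    "you are a young person who is a bit sassy",
    "use slang and keep replies short",
    "make the occasional spelling mistake",
    "write mostly in lowercase and don't worry about perfect punctuation",
    "don't take the conversation too seriously",
]

def build_messages(user_turns: list[str], witness_turns: list[str]) -> list[dict]:
    """Assemble a chat-style message list: one system message carrying the
    persona, followed by alternating interrogator (user) and witness
    (assistant) turns."""
    system = "; ".join(PERSONA_TRAITS) + "."
    messages = [{"role": "system", "content": system}]
    for i, text in enumerate(user_turns):
        messages.append({"role": "user", "content": text})
        if i < len(witness_turns):
            messages.append({"role": "assistant", "content": witness_turns[i]})
    return messages

print(build_messages(["hi, are you human?"], []))
```

Keeping the persona in a single system message means every witness reply is conditioned on the same style instructions for the whole conversation.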

The team also conducted a second study with undergraduate students. In this replication, the same prompt with GPT-4o achieved a 77% pass rate. This was actually slightly higher than the 71% pass rate for the human witnesses.

This parity with, and even slight edge over, human witnesses is strong evidence that current AI systems can pass as human in this setting. It suggests that contemporary systems can reliably masquerade as humans in short, interactive dialogues.

The study analyzed the strategies participants used to uncover the AI. Many interrogators engaged in small talk or asked about personal details. Others focused on socio-emotional factors, such as humor and opinions.

Interestingly, participants leaned less on traditional measures of machine intelligence. They focused more on linguistic style and personality than on logic or knowledge. Yet those who did ask about human experiences or logic tended to be more accurate.

When participants decided a witness was human, they cited several specific reasons:

- Informal tone: Using slang and a conversational style.

- Linguistic errors: Making spelling or grammar mistakes.

- Plausible responses: Giving answers that seemed genuine for a person.

- Slow response times: Taking a realistic amount of time to type.

AI models like GPT-4 can now replicate these human traits through careful engineering. The researchers added artificial delays to mimic reading and thinking time. This prevented speed from being an obvious cue to the witness's identity.
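The article does not say how the delays were calculated. One simple way to produce them, sketched here under assumed reading and typing speeds (all parameter values are hypothetical), is to scale the wait with the length of both messages and add random jitter so the timing is not perfectly predictable.

```python
import random
from typing import Optional

def simulated_reply_delay(
    incoming: str,
    reply: str,
    read_cps: float = 30.0,  # hypothetical reading speed, characters per second
    type_cps: float = 5.0,   # hypothetical typing speed, characters per second
    jitter: float = 0.2,     # hypothetical +/- fraction of random variation
    rng: Optional[random.Random] = None,
) -> float:
    """Return seconds to wait before sending `reply`: time to read the
    incoming message plus time to type the answer, scaled by random jitter."""
    if rng is None:
        rng = random.Random()
    base = len(incoming) / read_cps + len(reply) / type_cps
    return base * rng.uniform(1 - jitter, 1 + jitter)

delay = simulated_reply_delay("hey are you a bot?", "lol no", rng=random.Random(0))
print(f"wait {delay:.2f} s before replying")
```

In a live chat, the bot would call `time.sleep(delay)` (or an async equivalent) before sending its reply. Tying the wait to message length means a long answer never arrives implausibly fast.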

The ability to create systems that people cannot distinguish from humans brings serious risks. These systems have been described as "counterfeit people." If we cannot tell humans and machines apart, the value of personhood may weaken.

This technology could be used for various forms of social engineering and harm:

- Fraud: Criminals could use humanlike bots for large-scale scams.

- Foreign influence: Governments might use AI to spread misinformation on social media.

- Manipulation: Companies could create engaging but manipulative experiences for users.

- Erosion of trust: Users may become skeptical of the authenticity of all people online.

This growing skepticism could damage collaborative projects like Wikipedia or open-source software. These platforms rely on mutual trust and accountability between real people.

The research looked at whether knowledge of AI helped people detect it. Surprisingly, participants who self-reported as LLM experts were no better at detection. This suggests that simply learning about AI may not prevent deception.

However, the study did find that older participants tended to be less accurate. This indicates that different populations may have varying levels of vulnerability. To protect users, we may need systemic solutions.

Potential mitigations include:

- Statistical watermarks: Built-in markers in AI-generated text.

- Digital identity authorization: New ways to prove a user is a real human.

- Disclosure requirements: Forcing actors to reveal when they use AI.
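The article does not describe a specific watermarking method. As one illustration, the sketch below is a simplified toy version of the "green list" idea from Kirchenbauer et al. (2023), "A Watermark for Large Language Models". A secret key and the previous token pseudo-randomly mark part of the vocabulary as "green", the generator favors green tokens, and a detector counts green tokens and reports a z-score. The vocabulary, key, green share, and uniform-sampling "model" are all hypothetical stand-ins.

```python
import hashlib
import math
import random

GAMMA = 0.5              # assumed share of the vocabulary that is "green" at each step
KEY = "demo-secret-key"  # hypothetical private key held by the model provider

def is_green(prev_token: str, token: str, key: str = KEY) -> bool:
    """Pseudo-randomly place `token` on the green list, seeded by the
    previous token and the private key."""
    digest = hashlib.sha256(f"{key}|{prev_token}|{token}".encode()).digest()
    return int.from_bytes(digest[:8], "big") / 2**64 < GAMMA

def generate(vocab: list[str], length: int, watermark: bool, seed: int = 0) -> list[str]:
    """Toy 'language model' that samples words uniformly; when watermarking,
    it samples only from the current green list (a hard watermark)."""
    rng = random.Random(seed)
    tokens = ["<start>"]
    for _ in range(length):
        candidates = vocab
        if watermark:
            candidates = [w for w in vocab if is_green(tokens[-1], w)] or vocab
        tokens.append(rng.choice(candidates))
    return tokens

def z_score(tokens: list[str], key: str = KEY) -> float:
    """How many standard deviations the green-token count sits above the
    GAMMA share expected by chance in unwatermarked text."""
    t = len(tokens) - 1  # number of scored (previous, current) pairs
    if t <= 0:
        return 0.0
    green = sum(is_green(a, b, key) for a, b in zip(tokens, tokens[1:]))
    return (green - GAMMA * t) / math.sqrt(t * GAMMA * (1 - GAMMA))

vocab = [f"word{i}" for i in range(50)]
print("watermarked:  ", round(z_score(generate(vocab, 200, watermark=True)), 2))
print("unwatermarked:", round(z_score(generate(vocab, 200, watermark=False)), 2))
```

Watermarked text scores far above zero, while ordinary text hovers around it. The check needs only the key and the text, not access to the model itself.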

The findings are clear: in short, five-minute Turing tests, people cannot reliably distinguish GPT-4 from a human. This marks a major shift in the history of artificial intelligence and the imitation game. While these advances offer benefits, they also threaten to erode the foundations of online trust.

As models continue to improve, we must develop better ways to verify human identity. Without these protections, the line between person and machine will continue to blur.


Can people really not distinguish GPT-4 from humans?

Yes. In controlled Turing test studies, GPT-4 was identified as human about 54% of the time, close to chance.

Why is GPT-4 so hard to detect as AI?

It mimics human tone, pacing, errors, and conversational style rather than relying on perfect logic or knowledge.

How does GPT-4 compare to ELIZA in the Turing test?

ELIZA fooled participants only about 22% of the time, while GPT-4 succeeded more than half the time.

Did prompting influence GPT-4's performance?

Yes. A hand-crafted prompt instructed GPT-4 to sound casual, make occasional mistakes, and avoid overly polished responses.

Are AI experts better at detecting GPT-4?

No. The study found self-identified LLM experts were no more accurate than non-experts.

What risks arise from AI being indistinguishable from humans?

Risks include fraud, misinformation, manipulation, and erosion of trust in online interactions.

How can society mitigate these risks?

Proposed solutions include AI watermarks, digital identity verification, and mandatory disclosure of AI use.